A mess.

Pi4 with 2TB SSD running:

- Portainer

- Calibre

- qBittorrent

- Kodi

HDMI cable straight to the living room Smart TV (which is not connected to the internet).

Other devices access media (TV shows, movies, books, comics, audiobooks) using VLC over DLNA, except for the e-readers, which just use the Calibre web UI.

Main router is flashed with OpenWrt and runs a DNS adblocker. Ethernet runs to a 2nd router upstairs and to the main PC, and there's a small WiFi repeater with Ethernet in the basement. It’s not a huge house, but it does have old, thick walls, which are terrible for WiFi propagation.
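The post doesn't say which adblocker the router runs. As a rough illustration of what a DNS adblocker does, here is a generic sketch of suffix-based blocking: a query is refused if the name, or any parent domain of it, is on a blocklist. This is not the code of OpenWrt's adblock package or of Pi-hole (their matching rules differ); the function name and blocklist entries are hypothetical.

```python
def is_blocked(qname: str, blocklist: set[str]) -> bool:
    """Return True if qname or any parent domain of it is on the blocklist.

    Generic suffix matching (a sketch): "cdn.tracker.example.net" is
    blocked by an entry for "tracker.example.net". Matching is done on
    whole labels, so "notads.example.com" is NOT blocked by "ads.example.com".
    """
    labels = qname.rstrip(".").lower().split(".")
    # Walk from the full name up to the top-level domain:
    # a.b.example.com -> b.example.com -> example.com -> com
    for i in range(len(labels)):
        if ".".join(labels[i:]) in blocklist:
            return True
    return False


if __name__ == "__main__":
    # Hypothetical blocklist entries, for illustration only.
    blocklist = {"tracker.example.net", "ads.example.com"}
    for name in ("cdn.tracker.example.net", "example.com", "ADS.example.com."):
        verdict = "blocked" if is_blocked(name, blocklist) else "allowed"
        print(f"{name}: {verdict}")
```

A blocked name is typically answered locally (for example with 0.0.0.0 or NXDOMAIN) instead of being forwarded upstream, which is why one box on the router can cover every device on the network.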

Bad. I have a Raspberry Pi 4 hanging from an HDMI cable going up to a projector, and a 2TB SSD hanging from the Raspberry Pi. I host Nextcloud and Transmission on my RPi and use Kodi for viewing media through the projector.

I only use the highest grade when it comes to hardware:

Case: found in the trash

Motherboard: some random Asus AM3 board I got as a hand-me-down.

CPU: AMD FX-8320E (8 core)

RAM: 16GB

Storage: 5x 2TB HDDs + 128GB SSD, and a 32GB flash drive as a boot device

That’s it… My entire “homelab”

Beautiful. 🫠

1) DIY PC (running everything)

- MSI Z270-A PRO

- Intel G3930

- 16GB DDR4

- ATX PSU 550W

- 250GB SSD for OS

- 500GB SSD for data

- 12TB HDD for backup + media

2) Raspberry Pi 4 4GB (running a 2nd Pi-hole instance)

Only 2 piHOLES?

Not sure if this is a joke, but I don't see a reason to have more than 2.

Sorry forgot the /s

looks like this and runs NetBSD

Services:

- OpenSSH

Why?

I don’t understand, why what my lemmy?

Internet:

- 1G fiber

Router:

- N100 with dual 2.5G nics

Lab:

- 3x N100 mini PCs as k8s control plane + Ceph mon/mds/mgr

- 4x Aoostar R7 “NAS” systems (5700u/32G ram/20T rust/2T sata SSD/4T nvme) as ceph OSDs/k8s workers

Network:

- Hodge podge of switches I shouldn’t trust nearly as much as I do

- 3x 8 port 2.5G switches (1 with poe for APs)

- 1x 24 port 1G switch

- 2x omada APs

Software:

- All the standard stuff for media archival purposes

- Ceph for storage (using some manual tiering in CephFS)

- K8s for container orchestration (deployed via k0sctl)

- A handful of cloud-hypervisor VMs

- Most of the lab managed by some tooling I’ve written in go

- Alpine Linux for everything

All under 120W of power usage.

How are you finding the Aoostar R7? I’ve had my eye on it for a while, but there isn’t much talk about it outside of YouTube reviews.

They’ve been rock solid so far, even through the initial sync from my old file server (pretty intensive network and disk usage for about 5 days straight). I’ve only been running them for about 3 months, though, so time will tell. They’re like most mini PC manufacturers with funny names, though: I doubt I’ll ever get any sort of BIOS/UEFI update.

It’s running NetBSD, isn’t it?

I have 5 servers in total. All except the iMac are running Alpine Linux.

Internet

Ziply Fiber 100Mbps small business internet. 2 Asus AX82U routers running in AiMesh.

Rack

Raising Electronics 27U rack

N3050 NUCs

One is running mailcow, dnsmasq, unbound and the other is mostly idle.

iMac

The iMac is set up by my 3D printers. I use it for slicing, and I run BlueBubbles on it for texting from Linux systems.

Family Server

Hardware

- i7-7820X

- Rosewill rackmount case

- Corsair water cooler

- 2x 4TB drives

- 2x 240GB SSDs

- Gigabyte motherboard

Mostly doing nothing, currently using it to mine Monero.

Main Cow Server

Hardware

- Ryzen 9 3900XT

- Rosewill rackmount case

- 3x 18TB drives

- 2x 1TB NVMe

- Gigabyte motherboard

Services

- ZFS 36TB Pool

- Secondary DNS Server

- NFS (nas)

- Samba (nas)

- libvirtd (virtual machines)

- forgejo (git forge)

- radicale (caldav/carddav)

- nut (network ups tools)

- caddy (web server)

- turnserver

- minetest server (open source blockgame)

- miniflux (rss)

- freshrss (rss)

- akkoma (fedi)

- conduit (matrix server)

- syncthing (file syncing)

- prosody (xmpp)

- ergo (ircd)

- agate (gemini)

- chezdav (webdav server)

- podman (running immich, isso, peertube, vpnstack)

- immich (photo syncing)

- isso (comments on my website)

- matrix2051 (matrix to irc bridge)

- peertube (federated youtube alternative)

- soju (irc bouncer)

- xmrig (Monero mining)

- rss2email

- vpnstack

- gluetun

- qbittorrent

- prowlarr

- sockd

- sabnzbd
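The post lists 3x 18TB drives and a 36TB ZFS pool but doesn't say how the pool is laid out. Those numbers are consistent with a single RAIDZ1 vdev (one drive's worth of parity), which is an assumption here. A back-of-the-envelope calculator for that guess, ignoring ZFS metadata overhead, reserved space and TB vs TiB differences (the function name is hypothetical):

```python
def raidz_usable_tb(drives: int, drive_tb: float, parity: int) -> float:
    """Approximate usable capacity of one RAIDZ vdev, before ZFS overhead.

    parity=1 is RAIDZ1, 2 is RAIDZ2, 3 is RAIDZ3: each parity level
    costs roughly one drive's worth of space.
    """
    if parity not in (1, 2, 3):
        raise ValueError("RAIDZ parity must be 1, 2 or 3")
    if drives <= parity:
        raise ValueError("a RAIDZ vdev needs more drives than its parity level")
    return (drives - parity) * drive_tb


if __name__ == "__main__":
    drives, size_tb = 3, 18  # 3x 18TB, as listed in the post
    print("stripe, no redundancy:", drives * size_tb)
    print("RAIDZ1:", raidz_usable_tb(drives, size_tb, 1))
    print("3-way mirror:", size_tb)  # a mirror stores one drive's worth of data
```

Only the RAIDZ1 line comes out at 36TB, which is why that layout is the guess; `zpool status` on the server would show the real vdev layout.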

Why do you host FreshRSS and MiniFlux if you don’t mind me asking?

I kind of prefer Miniflux, but I maintain the FreshRSS package in Alpine, so I keep an instance to test things.

Thank you. I’m looking at sorting an aggregator out and am leaning towards Miniflux

- An HP ML350p with 2x hyperthreaded 8-core Xeons (I forget the model number) and 256GB DDR3, running Ubuntu and K3s as the primary application host

- A pair of Raspberry Pis (one 3, one 4) as anycast DNS resolvers

- A random mini PC I got for free from work, running VyOS as my border router

- A Brocade ICX 6610-48p as core switch

Hardware is total overkill. Software-wise, everything is running in containers, deployed into Kubernetes using helmfile, Jenkins and Gitea.

- PicoPSU

- ASRock N100M

- Eaton 3S mini UPS

- 250GB SATA SSD for the OS

- 4x 4TB SATA SSDs

- PCIe SATA splitter

All in a small PC case.

The server is running YunoHost.

At home - Networking

- 10Gbps internet via Sonic, a local ISP in the San Francisco Bay Area. It’s only $40/month.

- TP-Link Omada ER8411 10Gbps router

- MikroTik CRS312-4C+8XG-RM 12-port 10Gbps switch

- 2 x TP-Link Omada EAP670 access points with 2.5Gbps PoE injectors

- TP-Link TL-SG1218MPE 16-port 1Gbps PoE switch for security cameras (3 x Dahua outdoor cams and 2 x Amcrest indoor cams). All cameras are on a separate VLAN that has no internet access.

- SLZB-06 PoE Zigbee coordinator for home automation - all my light switches are Inovelli Blue Zigbee smart switches, plus I have a bunch of smart plugs. Aqara temperature sensors, buttons, door/window sensors, etc.

Home server:

- Intel Core i5-13500

- Asus PRO WS W680M-ACE SE mATX motherboard

- 64GB server DDR5 ECC RAM

- 2 x 2TB Solidigm P44 Pro NVMe SSDs in ZFS mirror

- 2 x 20TB Seagate Exos X20 in ZFS mirror for data storage

- 14TB WD Purple Pro for security camera footage. Alerts SFTP’d to offsite server for secondary storage

- Running Unraid, a bunch of Docker containers, a Windows Server 2022 VM for Blue Iris, and an LXC container for a Borg backup server.

For things that need 100% reliability like emails, web hosting, DNS hosting, etc, I have a few VPSes “in the cloud”. The one for my emails is an AMD EPYC, 16GB RAM, 100GB NVMe space, 10Gbps connection for $60/year at GreenCloudVPS in San Jose, and I have similar ones at HostHatch (but with 40Gbps instead of 10Gbps) in Los Angeles.

I’ve got a bunch of other VPSes, mostly for https://dnstools.ws/, which is an open-source project I run. It lets you perform DNS lookups, pings, traceroutes, etc. from nearly 30 locations around the world. Many of those are sponsored, meaning the company provides them cheaply or for free in exchange for a backlink.

This Lemmy server is on another GreenCloudVPS system - their ninth birthday special which has 9GB RAM and 99GB NVMe disk space for $99 every three years ($33/year).

I hate you and want all of your equipment

https://pixelfed.social/p/thejevans/664709222708438068

EDIT:

Server:

- AMD 5900X

- 64GB RAM

- 2x10TB HDD

- RTX 3080

- LSI-9208i HBA

- 2x SFP+ NIC

- 2TB NVMe boot drive

Proxmox hypervisor:

- TrueNAS VM (HBA PCIe passthrough)

- HomeAssistant VM

- Debian 12 LXC as SSH entrypoint and Ansible controller

- Debian 12 VM with Ansible controlled docker containers

- Debian 12 VM (GPU PCIe passthrough) with Jellyfin and other services that use GPU

- Debian 12 VM for other docker stuff not yet controlled by Ansible and not needing GPU

Router: N6005 fanless mini PC, 2.5Gbit NICs, pfSense

Switch: MikroTik CRS, 8-port 2.5Gbit, 2-port SFP+

You play games on that server don’t you. 😁

I have a Kasm setup with blender and CAD tools, I use the GPU for transcoding video in Immich and Jellyfin, and for facial recognition in Immich. I also have a CUDA dev environment on there as a playground.

I upgraded my gaming PC to an AMD 7900 XTX, so I can finally be rid of Nvidia and their gaming and Wayland driver issues on Linux.

Does Immich require a GPU or can it do facial recognition on CPU alone?

It can work on CPU alone, but allows for GPU hardware acceleration.

Nice. I gotta try it.

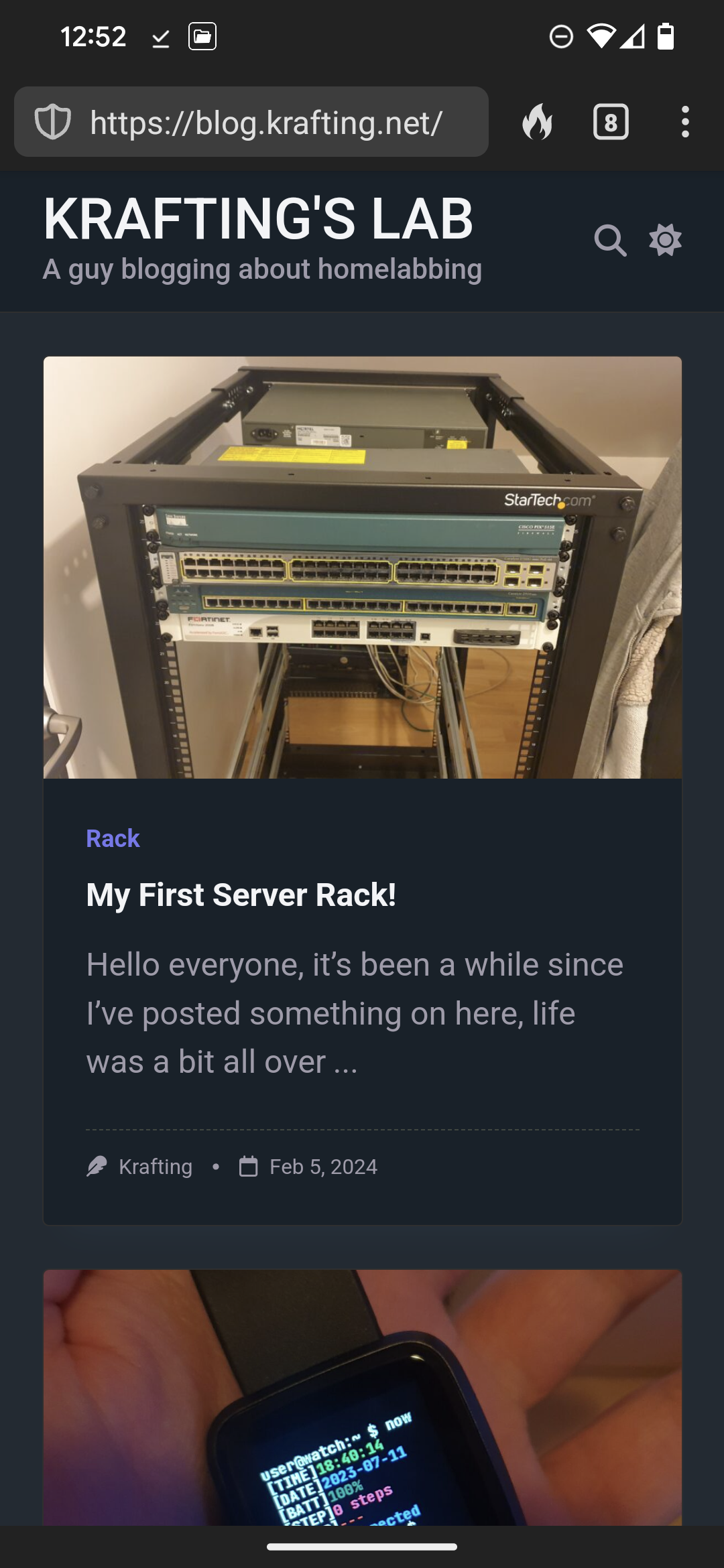

https://blog.krafting.net/my-first-server-rack/

For a few weeks now, it’s been looking like this! (At the bottom there is a complete picture)

Plus an Orange Pi 3 as a DNS/reverse proxy server

Your link is not on https and asking me to download a .bin file. Extremely sus

What?

The same thing happened to me when I first tried to go there, but it’s fine now.

Also prompting me to download a .bin

Okay, so that’s a bit concerning… I’d love to get my hands on this “bin” file; I cannot reproduce the issue on my side… Also, the site should be HTTPS only. I had a caching bug recently that showed the ActivityPub data instead of the blog post. Could it be that? Are you on mobile, and the browser can’t display the JSON data properly, so it tries to download it with a weird name?

I am on Android mobile. Firefox only prompts to download downloadfile.bin. The DuckDuckGo browser actually opens the file contents. I’ll post it here; since I’m getting it from a public page, I’m hoping that’s okay. This is the content…

{"@context":["https://www.w3.org/ns/activitystreams",{"Hashtag":"as:Hashtag"}],"id":"https://blog.krafting.net/my-first-server-rack/","type":"Note","attachment":[{"type":"Image","url":"https://blog.krafting.net/wp-content/uploads/2024/02/603fb502-9977-461f-92c6-7375055fdec6-min-scaled.jpg","mediaType":"image/jpeg"},{"type":"Image","url":"https://blog.krafting.net/wp-content/uploads/2024/02/20240129_184909-min-scaled.jpg","mediaType":"image/jpeg"},{"type":"Image","url":"https://blog.krafting.net/wp-content/uploads/2024/02/20240129_185338-min-scaled.jpg","mediaType":"image/jpeg"},{"type":"Image","url":"https://blog.krafting.net/wp-content/uploads/2024/02/20240129_193432-min-scaled.jpg","mediaType":"image/jpeg"}],"attributedTo":"https://blog.krafting.net/author/admin/","content":"\u003Cp\u003E\u003Cstrong\u003EMy First Server Rack!\u003C/strong\u003E\u003C/p\u003E\u003Cp\u003E\u003Ca href=\u0022https://blog.krafting.net/my-first-server-rack/\u0022\u003Ehttps://blog.krafting.net/my-first-server-rack/\u003C/a\u003E\u003C/p\u003E\u003Cp\u003E\u003Ca rel=\u0022tag\u0022 class=\u0022hashtag u-tag u-category\u0022 href=\u0022https://blog.krafting.net/tag/homelab/\u0022\u003E#homelab\u003C/a\u003E \u003Ca rel=\u0022tag\u0022 class=\u0022hashtag u-tag u-category\u0022 href=\u0022https://blog.krafting.net/tag/management/\u0022\u003E#management\u003C/a\u003E \u003Ca rel=\u0022tag\u0022 class=\u0022hashtag u-tag u-category\u0022 href=\u0022https://blog.krafting.net/tag/networking/\u0022\u003E#networking\u003C/a\u003E \u003Ca rel=\u0022tag\u0022 class=\u0022hashtag u-tag u-category\u0022 href=\u0022https://blog.krafting.net/tag/rack/\u0022\u003E#rack\u003C/a\u003E \u003Ca rel=\u0022tag\u0022 class=\u0022hashtag u-tag u-category\u0022 href=\u0022https://blog.krafting.net/tag/server/\u0022\u003E#server\u003C/a\u003E \u003Ca rel=\u0022tag\u0022 class=\u0022hashtag u-tag u-category\u0022 href=\u0022https://blog.krafting.net/tag/startech/\u0022\u003E#startech\u003C/a\u003E\u003C/p\u003E","contentMap":{"en":"\u003Cp\u003E\u003Cstrong\u003EMy First Server Rack!\u003C/strong\u003E\u003C/p\u003E\u003Cp\u003E\u003Ca href=\u0022https://blog.krafting.net/my-first-server-rack/\u0022\u003Ehttps://blog.krafting.net/my-first-server-rack/\u003C/a\u003E\u003C/p\u003E\u003Cp\u003E\u003Ca rel=\u0022tag\u0022 class=\u0022hashtag u-tag u-category\u0022 href=\u0022https://blog.krafting.net/tag/homelab/\u0022\u003E#homelab\u003C/a\u003E \u003Ca rel=\u0022tag\u0022 class=\u0022hashtag u-tag u-category\u0022 href=\u0022https://blog.krafting.net/tag/management/\u0022\u003E#management\u003C/a\u003E \u003Ca rel=\u0022tag\u0022 class=\u0022hashtag u-tag u-category\u0022 href=\u0022https://blog.krafting.net/tag/networking/\u0022\u003E#networking\u003C/a\u003E \u003Ca rel=\u0022tag\u0022 class=\u0022hashtag u-tag u-category\u0022 href=\u0022https://blog.krafting.net/tag/rack/\u0022\u003E#rack\u003C/a\u003E \u003Ca rel=\u0022tag\u0022 class=\u0022hashtag u-tag u-category\u0022 href=\u0022https://blog.krafting.net/tag/server/\u0022\u003E#server\u003C/a\u003E \u003Ca rel=\u0022tag\u0022 class=\u0022hashtag u-tag u-category\u0022 href=\u0022https://blog.krafting.net/tag/startech/\u0022\u003E#startech\u003C/a\u003E\u003C/p\u003E"},"published":"2024-02-05T19:10:19Z","tag":[{"type":"Hashtag","href":"https://blog.krafting.net/tag/homelab/","name":"#homelab"},{"type":"Hashtag","href":"https://blog.krafting.net/tag/management/","name":"#management"},{"type":"Hashtag","href":"https://blog.krafting.net/tag/networking/","name":"#networking"},{"type":"Hashtag","href":"https://blog.krafting.net/tag/rack/","name":"#rack"},{"type":"Hashtag","href":"https://blog.krafting.net/tag/server/","name":"#server"},{"type":"Hashtag","href":"https://blog.krafting.net/tag/startech/","name":"#startech"}],"updated":"2024-02-05T19:22:17Z","url":"https://blog.krafting.net/my-first-server-rack/","to":["https://www.w3.org/ns/activitystreams#Public","https://blog.krafting.net/wp-json/activitypub/1.0/users/1/followers"],"cc":[]}

I can erase the direct post link and then the site loads, but then if I click the post title it loads the text again…

Okay, so the “bin” is actually the ActivityPub data… I don’t know why this is still happening… There might be something wrong somewhere, but where…
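One plausible mechanism for this kind of bug, offered as an assumption rather than a diagnosis of this site: the same post URL serves HTML to browsers and ActivityStreams JSON to fediverse servers, chosen from the request's `Accept` header. If a page cache in front of the blog stores the JSON response without keying on `Accept` (which is what a `Vary: Accept` response header asks caches to do), later browser visitors get the JSON, and Firefox on Android offers it as a download. The toy model below shows that failure; `wants_activitypub`, `origin` and `ToyCache` are hypothetical names, and the header parsing is simplified (it ignores q-values and the `ld+json` profile parameter).

```python
ACTIVITYPUB_TYPES = {"application/activity+json", "application/ld+json"}


def wants_activitypub(accept: str) -> bool:
    """True if any media range in the Accept header is an ActivityPub JSON type.

    Simplified: ignores q-values and media type parameters such as profile.
    """
    for media_range in accept.split(","):
        media_type = media_range.split(";")[0].strip().lower()
        if media_type in ACTIVITYPUB_TYPES:
            return True
    return False


def origin(url: str, accept: str) -> dict:
    """Stand-in for the blog: one URL, two representations, negotiated on Accept."""
    if wants_activitypub(accept):
        return {"Content-Type": "application/activity+json", "Vary": "Accept",
                "body": '{"type": "Note"}'}
    return {"Content-Type": "text/html; charset=utf-8", "Vary": "Accept",
            "body": "<html>...</html>"}


class ToyCache:
    """Page cache keyed on the URL, optionally also on the negotiated representation.

    A real cache honoring Vary keys on the Accept header value itself; keying on
    the negotiated result is a simplification for this sketch.
    """

    def __init__(self, honor_vary: bool):
        self.honor_vary = honor_vary
        self.store: dict = {}

    def fetch(self, url: str, accept: str) -> dict:
        key = (url, wants_activitypub(accept)) if self.honor_vary else url
        if key not in self.store:
            self.store[key] = origin(url, accept)  # cache miss: ask the origin
        return self.store[key]


if __name__ == "__main__":
    url = "https://blog.example/post/"  # hypothetical URL
    for honor in (False, True):
        cache = ToyCache(honor_vary=honor)
        cache.fetch(url, "application/activity+json")  # a fediverse server fetches first
        page = cache.fetch(url, "text/html,application/xhtml+xml,*/*;q=0.8")  # then a browser
        print(f"honor_vary={honor}: browser got {page['Content-Type']}")
```

With `honor_vary=False` the browser is handed the cached JSON; with it enabled each representation is cached separately. In a real setup the fix would be in the caching layer's configuration (keying on or bypassing the cache for ActivityPub requests), not in code like this.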

I am on mobile, and it does not open

Okay, so that’s a bit concerning… I’d love to get my hands on this “bin” file; I cannot reproduce the issue on my side… Also, the site should be HTTPS only. I had a caching bug recently that showed the ActivityPub data instead of the blog post. Could it be that? Are you on mobile, and the browser can’t display the JSON data properly, so it tries to download it with a weird name?

I’m not really a networking expert so I can’t make too good of a guess as to what happened. I’m on the latest Firefox mobile release on Android and was accessing from a Colorado IP. When I originally tried the site, nothing was rendered. It was a blank page or just a redirect for download. I didn’t download the .bin. I clicked your link twice before sending my message.

Well, thanks for the follow-up anyway. I did some tweaks, and I hope it won’t happen again… We’ll see.