bahmanm

Husband, father, kabab lover, history buff, chess fan and software engineer. Believes creating software must resemble art: intuitive creation and joyful discovery.

Views are my own.

- 55 Posts

- 105 Comments

Besides the fun of stretching your mental muscles to think in a different paradigm, Forth is usually used in the embedded devices domain (like that of the earlier Mars rover I forgot the name of).

This project for me is mostly for the excitement and joy I get out of implementing a Forth (which is usually done in Assembler and C) on the JVM. While I managed to keep the semantics the same, the underlying machinery is vastly different from, say, GForth. I find this quite a pleasing exercise.

Last but not least, if you like concatenative programming but were unable to practise it for fun on the JVM, bjForth may be what you’re looking for.

Hope this answers your question.

Whoa! This is pretty rad! Thanks for sharing!

That’s definitely an interesting idea. Thanks for sharing.

Though it means that someone down the line must have written a bootstrap programme with C/Assembler to run the host forth.

In case of bjForth, I decided to write the bootstrap too.

That’s impossible unless you’ve got a Forth machine.

Where the OS native API is accessible via C API, you’re bound to write, using C/C++/Rust/etc, a small bootstrap programme to then write your Forth on top of. That’s essentially what bjForth is at the moment: the bootstrap using JVM native API.

Currently I’m working on a set of libraries to augment the 80-something words bjForth bootstrap provides. These libraries will be, as you suggested, written in Forth not Java because they can tap into the power of JVM via the abstraction API that bootstrap primitives provide.

Hope this makes sense.

Haha…good point! That said bjForth is still a fully indirect threaded Forth. It’s just that instead of assembler and C/C++ it calls Java API to do its job.

2·1 year ago

Thanks for the pointer! Very interesting. I actually may end up doing a prototype and see how far I can get.

Good question!

IMO a good way to help a FOSS maintainer is to actually use the software (esp pre-release) and report bugs instead of working around them. Besides helping the project quality, I’d find it very heart-warming to receive feedback from users; it means people out there are actually not only using the software but care enough for it to take their time, report bugs and test patches.

“Announcement”

It used to be quite common on mailing lists to categorise/tag threads by using subject prefixes such as “ANN”, “HELP”, “BUG” and “RESOLVED”.

It’s just an old habit but I feel my messages/posts lack some clarity if I don’t do it 😅

1·2 years ago

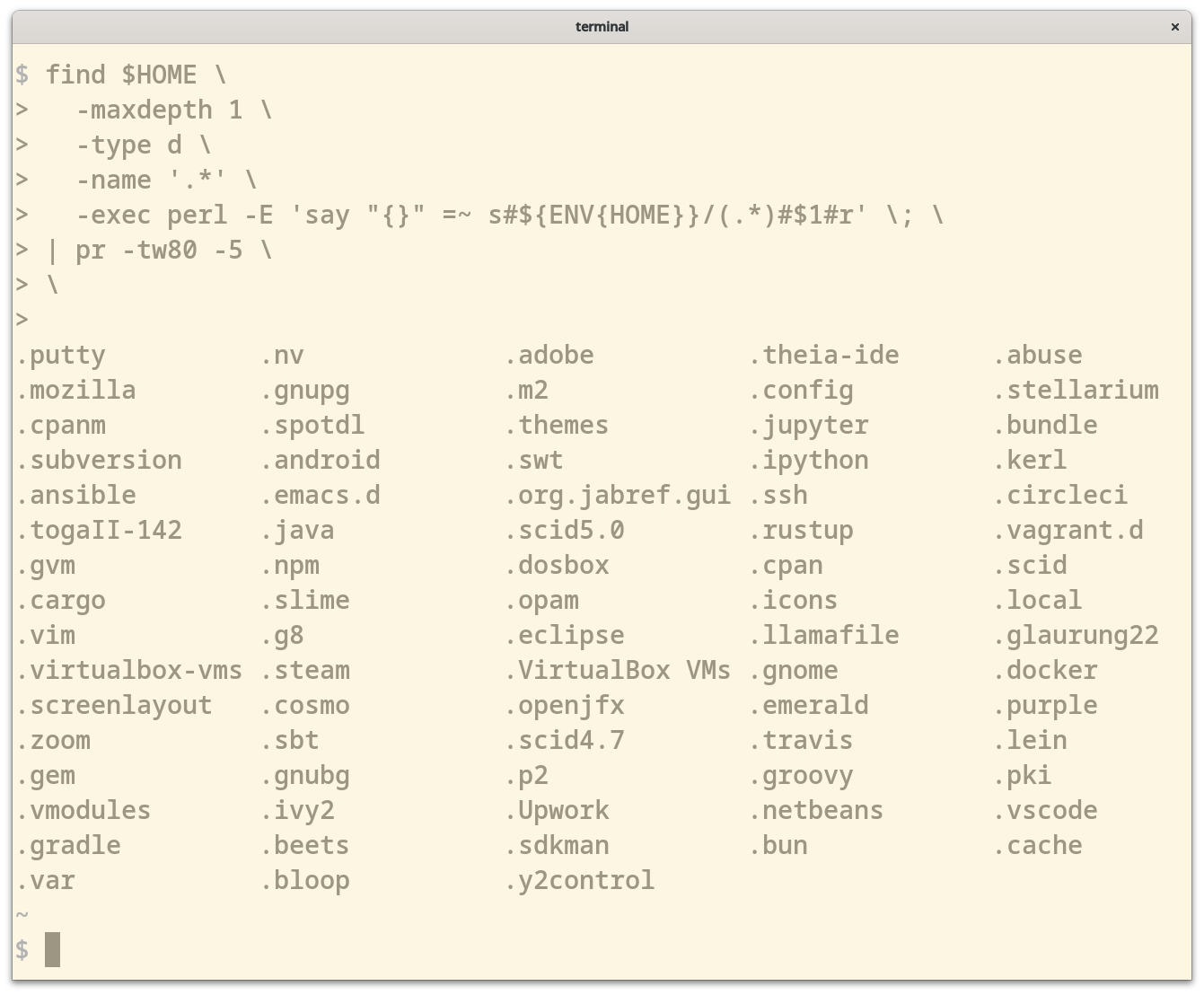

I usually capture all my development-time “automation” in Make and Ansible files. I also use makefiles to provide a consistent set of commands for the CI/CD pipelines to work w/ in case different projects use different build tools. That way CI/CD only needs to know about `make build`, `make test`, `make package`, … instead of Gradle/Maven/… specific commands.

Most of the time, the makefiles are quite simple and don’t need many comments. However, there are times that’s not the case and hence the need to write a line of comment on particular targets and variables.

1·2 years ago

Can you provide what you mean by check the environment, and why you’d need to do that before anything else?

One recent example is a makefile (in a subproject), w/ a dozen targets to provision machines and run Ansible playbooks. Almost all the targets need at least a few variables to be set. Additionally, I needed any fresh invocation to clean the “build” directory before starting the work.

At first, I tried capturing those variables w/ a bunch of `ifeq`s, `shell`s and `define`s. However, I wasn’t satisfied w/ the results for a couple of reasons:

- Subjectively speaking, it didn’t turn out as nice and easy-to-read as I wanted it to.
- I had to replicate my (admittedly simple) `clean` target as a shell command at the top of the file.

Then I tried capturing that in a target using `bmakelib.error-if-blank` and `bmakelib.default-if-blank` as below.

```makefile
##############

.PHONY : ensure-variables

ensure-variables : bmakelib.error-if-blank( VAR1 VAR2 )
ensure-variables : bmakelib.default-if-blank( VAR3,foo )

##############

.PHONY : ansible.run-playbook1

ansible.run-playbook1 : ensure-variables cleanup-residue | $(ansible.venv)
ansible.run-playbook1 : ...

##############

.PHONY : ansible.run-playbook2

ansible.run-playbook2 : ensure-variables cleanup-residue | $(ansible.venv)
ansible.run-playbook2 : ...

##############
```

But this was not DRY as I had to repeat myself.

That’s why I thought there may be a better way of doing this which led me to the manual and then the method I describe in the post.

running specific targets or rules unconditionally can lead to trouble later as your Makefile grows up

That is true! My concern is that when the number of targets which don’t need that initialisation grows I may have to rethink my approach.

I’ll keep this thread posted on how this pans out as the makefile scales.

Even though I’ve been writing GNU Makefiles for decades, I still am learning new stuff constantly, so if someone has better, different ways, I’m certainly up for studying them.

Love the attitude! I’m in the same boat. I could have just kept doing what I already knew but I thought a bit of manual reading is going to be well worth it.

1·2 years ago

That’s a great starting point - and a good read anyways!

Thanks 🙏

1·2 years ago

Agree w/ you re trust.

1·2 years ago

Thanks. At least I’ve got a few clues to look for when auditing such code.

1·2 years ago

Oh, neat!

On another note: ~2 mins looks like rather a “long” window of maintenance/disruption for what Cloudflare is 🙈

1·2 years ago

Update 1

lemmy.one is added to lemmy-meter 🥳

Please do reach out if you’ve got feedback/suggestions/ideas for a better lemmy-meter 🙏

You can always find me and other interested folks in

2·2 years ago

Oh, sorry to hear that 😕

I think I’ll just go ahead and add you folks to lemmy-meter for now. In case you want to be removed, it should take only a few minutes.

I’ll keep this thread posted once things are done.

2·2 years ago

It’s on 🥳

If you’ve got questions/feedback/ideas please drop a line in either

Thanks for showing interest 🙏

3·2 years ago

Update

sh.itjust.works is now added to lemmy-meter 🥳 Thanks all.

133·2 years ago

Something that I’ll definitely keep an eye on. Thanks for sharing!

Not really I’m afraid. Effects can be anywhere and they are not wrapped at all.

In technical terms, it’s stack-oriented, meaning the only way for functions (called “words”) to interact with each other is via a parameter stack.

Here’s an example:

`TIMES-10` is a word which pops one parameter from stack and pushes the result of its calculation onto stack. The `( x -- y)` is a comment which conventionally documents the “stack effect” of the word.

Now when you type `12` and press RETURN, the integer 12 is pushed onto stack. Then `TIMES-10` is called, which in turn pushes `10` onto stack and invokes `*`, which pops two values from stack, multiplies them and pushes the result onto stack.

That’s why when you type `.S` to see the contents of the stack, you get `120` in response.

Another example is

This simple example demonstrates the reverse Polish notation (RPN) Forth uses. The arithmetic expression is equal to `5 * (20 - 10)`, the result of which is pushed onto stack.

PS: One of the strengths of Forth is the ability to build a vocabulary (of words) around a particular problem in bottom-to-top fashion, much like Lisp.

PPS: If you’re ever interested to learn Forth, Starting Forth is a fantastic resource.
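For readers who don't have a Forth at hand, the stack behaviour described above can be modelled in a few lines of Python. This is only a rough sketch: `MiniForth`, `define` and `run` are hypothetical names made up for illustration, and none of it reflects how bjForth or any real Forth is implemented. It assumes `TIMES-10` was defined along the lines of `: TIMES-10 ( x -- y ) 10 * ;`, and it uses `5 20 10 - *` as one possible RPN spelling of `5 * (20 - 10)`.

```python
class MiniForth:
    """Toy model of a Forth parameter stack (illustrative, not bjForth's design)."""

    def __init__(self):
        self.stack = []
        # Primitive words: each one pops its operands and pushes its result.
        self.words = {
            "*": self._mul,
            "-": self._sub,
        }

    def _mul(self):
        b, a = self.stack.pop(), self.stack.pop()
        self.stack.append(a * b)

    def _sub(self):
        b, a = self.stack.pop(), self.stack.pop()
        self.stack.append(a - b)  # ( a b -- a-b ): the top of stack is subtracted

    def define(self, name, body):
        """Rough analogue of ': NAME body ;', a new word built from existing ones."""
        self.words[name] = lambda: self.run(body)

    def run(self, source):
        for token in source.split():
            if token in self.words:
                self.words[token]()            # a known word: execute it
            else:
                self.stack.append(int(token))  # anything else is an integer literal
        return self.stack


forth = MiniForth()
forth.define("TIMES-10", "10 *")
print(forth.run("12 TIMES-10"))        # [120]

print(MiniForth().run("5 20 10 - *"))  # [50]
```

Words only ever talk to each other through `self.stack`, which is the point of the stack-effect comment: `( x -- y )` says one value is consumed and one is left behind.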